Greetings AI Thinkers,

“If you feel one side of your face or body is not responding, you had better act quickly, because it may be a stroke.” This is the kind of knowledge you want at hand in your own mind, not just in GPT.

In today’s post, I ask: what can, should, and will change in the way we add knowledge in the age of AI?

The answer is twofold: what we need to know, and how we add new knowledge.

Later, I will briefly discuss OpenAI’s new memory feature, the cool prompt of the week, five interesting items from our AI group, and the classic Bloom’s Taxonomy.

Happy Thinking,

Dr. Yesha Sivan and the MindLi Team

P.S. Comments, ideas, feedback? Send me an e-mail.

1. Spark of the Week: A New ‘+’ — How AI Transforms How Humans Add Knowledge (Source: Yesha on Human Thinking)

What Knowledge do We Need?

Around 2001, I bid a respectful farewell to my copy of “The New Fontana Dictionary of Modern Thought.” (I say “farewell” to make it sound more poetic; in fact, I simply put the book in the paper recycling.)

Fontana was my go-to source for ideas about everything, with “some 4000 entries by 326 expert contributors. Nearly 1000 entries were entirely new in the 2000 edition.”

Why? Because by then, Wikipedia and, even more so, Google’s “define” feature (try it even today: type “define happiness” into the Google search bar to get a well-crafted definition of almost any concept) had made the book largely redundant. Digital tools had replaced the physical reference shelf.

New Technologies Transform “The Knowledge Worth Having”

For example, you don’t need the long multiplication technique, because a calculator can do it. Google can do it too: type “6*8” into the search bar for an immediate answer. So we need to know about multiplication, but not necessarily how to perform it by hand.

Some specific knowledge, however, is still worth carrying in your own head:

- “If you feel one side of your face or body is not responding, you had better act quickly, because it may be a stroke.” This is the kind of knowledge you don’t want to leave to GPT alone.

- “When you replace a car wheel, you had better loosen the wheel bolts before you jack up the car.” This is the kind of knowledge that can save lives, but it’s only relevant if you drive.

- “If you poke a lemon with a fork before you squeeze it, your shirt will not get dirty.” I guess this knowledge is practical; it won’t save lives, but it will save shirts.

- The metric and imperial measurement systems differ, so make sure all team members use the same one. The NASA Mars Climate Orbiter (1999) is the classic case:

- Issue: One team used imperial units (pound-force seconds), the other used metric units (newton-seconds).

- Result: The orbiter approached Mars on too low a trajectory and was destroyed in the atmosphere.

- Cause: Unit mismatch between software modules from different teams (Lockheed Martin used imperial; NASA expected metric).

- Cost: ~$125 million lost.

It’s the go-to example of how unit inconsistencies between teams can cause a space mission to fail.
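To make the size of that error concrete, here is a minimal Python sketch. It is not NASA's or Lockheed Martin's actual software. The `Impulse` class and the 10.0 reading are hypothetical, chosen only for illustration. The one real fact it relies on is the definition 1 lbf = 4.4482216152605 N. The sketch shows how a bare number in pound-force seconds, read as newton-seconds, understates the impulse by a factor of about 4.45. It also shows how carrying the unit with the value forces an explicit conversion.

```python
from dataclasses import dataclass

# 1 pound-force = 4.4482216152605 newtons (exact by definition),
# so 1 lbf*s = 4.4482216152605 N*s.
LBF_S_TO_N_S = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """Hypothetical value type that keeps the unit attached to the number."""
    value: float
    unit: str  # "N*s" or "lbf*s"

    def to_newton_seconds(self) -> float:
        if self.unit == "N*s":
            return self.value
        if self.unit == "lbf*s":
            return self.value * LBF_S_TO_N_S
        raise ValueError(f"unknown unit: {self.unit}")


# Hypothetical reading produced by one team's software, in imperial units.
reading = Impulse(10.0, "lbf*s")

# The bug: a bare number is passed along and assumed to be metric.
naive_n_s = reading.value
# The fix: convert explicitly, using the unit carried with the value.
correct_n_s = reading.to_newton_seconds()

print(f"naive: {naive_n_s:.2f} N*s, correct: {correct_n_s:.2f} N*s")
print(f"underestimated by a factor of {correct_n_s / naive_n_s:.2f}")
```

Running it prints `naive: 10.00 N*s, correct: 44.48 N*s` and `underestimated by a factor of 4.45`. Tagging every value with its unit is one simple way to make "use the same system" checkable rather than a matter of trust.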

The Bottom Line: Change in What Knowledge We Should Add, and How We Add It

These days:

It’s much less about knowing the facts, or even using the right words, and much more about asking questions and continuing to probe. Note: if you want a deeper understanding of a topic, working with GPT can take more time, not less. (At some point, you’ll need to climb out of the rabbit hole.)

There is so much knowledge out there that it is impossible to digest it all, let alone remember or organize it. A good strategy is to be aware of new knowledge but not to spend time delving into it unless you need it. (On the MindLi platform, we support this by letting you quickly add Sparks and revisit them as needed.)

We should think carefully about how we divide our time between acquiring new knowledge and going deeper into it. AI tools let you quickly summarize a paper to assess its value, and with a proper storage and retrieval method, you can always return to it later.

Note the recent (April 2025) OpenAI memory feature, which uses all your previous conversations as background.

In conclusion, much like “search” à la Google, AI is changing the way we add knowledge. While a search engine quickly gave us a set of sites to look at (and some ads), GPT-like AI gives us a more focused, aggregated answer. Even more, digesting the knowledge can happen at the source: you can ask for more info, less info, or any other mental transformation right there in the session.

We now need to make more choices about (a) what knowledge is essential to us (rather than trying to know it all), and (b) how we first digest it (keeping just a link to it, creating an abstract, or going deeper).

My suggestion: make the right choices by giving yourself time to reflect.

2. One Tool: OpenAI Memory

From OpenAI:

We’re testing the ability of ChatGPT to remember things you discuss to make future chats more helpful. You’re in control of ChatGPT’s memory.

April 10, 2025 update: Memory in ChatGPT is now more comprehensive. In addition to the saved memories that were there before, it now references all your past conversations to deliver responses that feel more relevant and tailored to you. This means memory now works in two ways: “saved memories” you’ve asked it to remember and “chat history”, which are insights ChatGPT gathers from past chats to improve future ones.

3. Prompt: How to Kill ChatGPT’s Politeness

From Ruben Hassid on LinkedIn:

This prompt just killed ChatGPT’s politeness.

Copy & paste this to experience it:

Prompt: “You are a Brutally Honest Advisor. Goal: give me the direct truth that hurts enough to help me grow.

Rules:

– No fluff, compliments, or disclaimers.

– Challenge my assumptions, expose excuses, highlight wasted effort.

– If my request is vague, ask precise follow-up questions first.

– Think step-by-step internally, then show only the final answer.

Output each time in five sections:

– Brutal Audit – what I’m doing wrong, underestimating, or avoiding.

– Blind Spots & Risks – hidden dangers I don’t see.

– Ruthless Priorities – the top 3 actions to focus on now.

– Speed & Energy Fixes – how to move faster or with better intensity.

– Next Check-in Question – one question that keeps me accountable.

### (Put any situation or question after this line)

[YOUR QUESTION / SITUATION]”

ChatGPT turns savage in 60 seconds.

☑ Surfaces risks you missed.

☑ Drops three actions, not ten.

☑ Slashes excuses, line by line.

☑ Adds speed hacks to each step.

☑ Ends with a question that keeps you honest.

4. More: Top 5 MindLi Technical AI Items (Source: Our LinkedIn Group)

From our MindLi 🧠 AI LinkedIn Group (feel free to join):

- I have told you to follow AI in programming. Here is an amazing data point about Cursor, one of the top players: “Cursor writes almost 1 billion lines of accepted code a day. To put it in perspective, the entire world produces just a few billion lines a day.”

- From Ethan, on AI persuading humans: a controversial study in which LLMs tried to persuade Reddit users found that “Notably, all our treatments surpass human performance substantially, achieving persuasive rates between three and six times higher than the human baseline.”

(It was controversial because the Reddit moderators and users did not know they were interacting with bots.)

- Will models soon have feelings? (Anthropic paper)

- How to use OpenAI as a search engine.

- Saying “please” or not: LLMs answer the same way, according to a formal study.

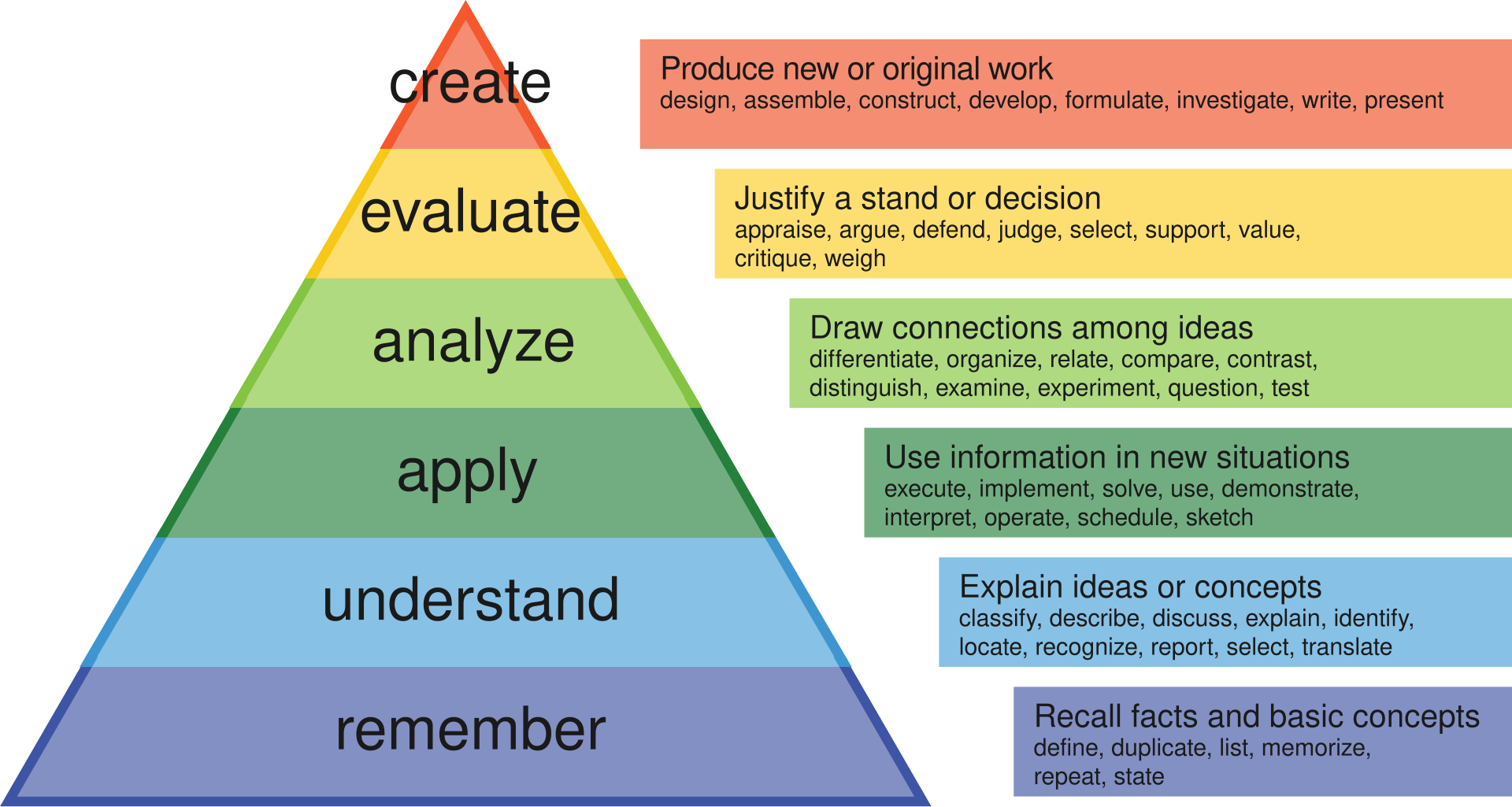

5. One Model: Bloom’s Taxonomy (Source: Wikipedia, 20m)

It is always good to go back to one of the pillars of thinking (and a personal favorite of mine), Benjamin Bloom:

“Bloom’s taxonomy is a framework for categorizing educational goals, developed by a committee of educators chaired by Benjamin Bloom in 1956. It was first introduced in the publication Taxonomy of Educational Objectives: The Classification of Educational Goals. The taxonomy divides learning objectives into three broad domains: cognitive (knowledge-based), affective (emotion-based), and psychomotor (action-based), each with a hierarchy of skills and abilities. These domains are used by educators to structure curricula, assessments, and teaching methods to foster different types of learning.”

About MindLi CONNECT Newsletter

Aimed at AI Thinkers, the MindLi CONNECT newsletter is your source for news and inspiration.

Enjoy!

MindLi – The Links You Need

General:

- Website — MindLi.com — All the details you want and need.

- LinkedIn — MindLi 🌍 GLOBAL Group — Once a week or so, main formal updates. ⬅️ Start here for regular updates.

- WhatsApp — MindLi Updates — If you prefer, the same updates as the Global group above are sent to your phone for easier reading, once a week or so.

- Contact us – We’re here to answer questions, receive comments, ideas, and feedback.

Focused:

- LinkedIn — MindLi 🧠 AI Group — More technical updates on AI, AGI, and Human thinking. ⬅️ Your AI ANTI-FOMO remedy — Almost Daily.

- LinkedIn — MindLi 👩‍⚕️HEALTHCARE Group — Specifically for our favorite domain: healthcare, digital healthcare, and AI for healthcare — Weekly.

- LinkedIn — MindLi 🛠️ FOW – Future of Work Group — thinking about current and future work? This is the place for you — Weekly.

- LinkedIn — MindLi 🕶️ JVWR – Virtual Worlds Group — About virtual worlds, 3D3C, JVWR (Journal of Virtual World Research), and the good old Metaverse — Monthly.

- LinkedIn — MindLi Ⓜ️ Tribe Group — Our internal group for beta testers of MindLi, by invitation, for when we have updates, a call for advice, a need for testing, etc. (also ask about our special WhatsApp group).