The AI Challenge: Who Will Pay for Content

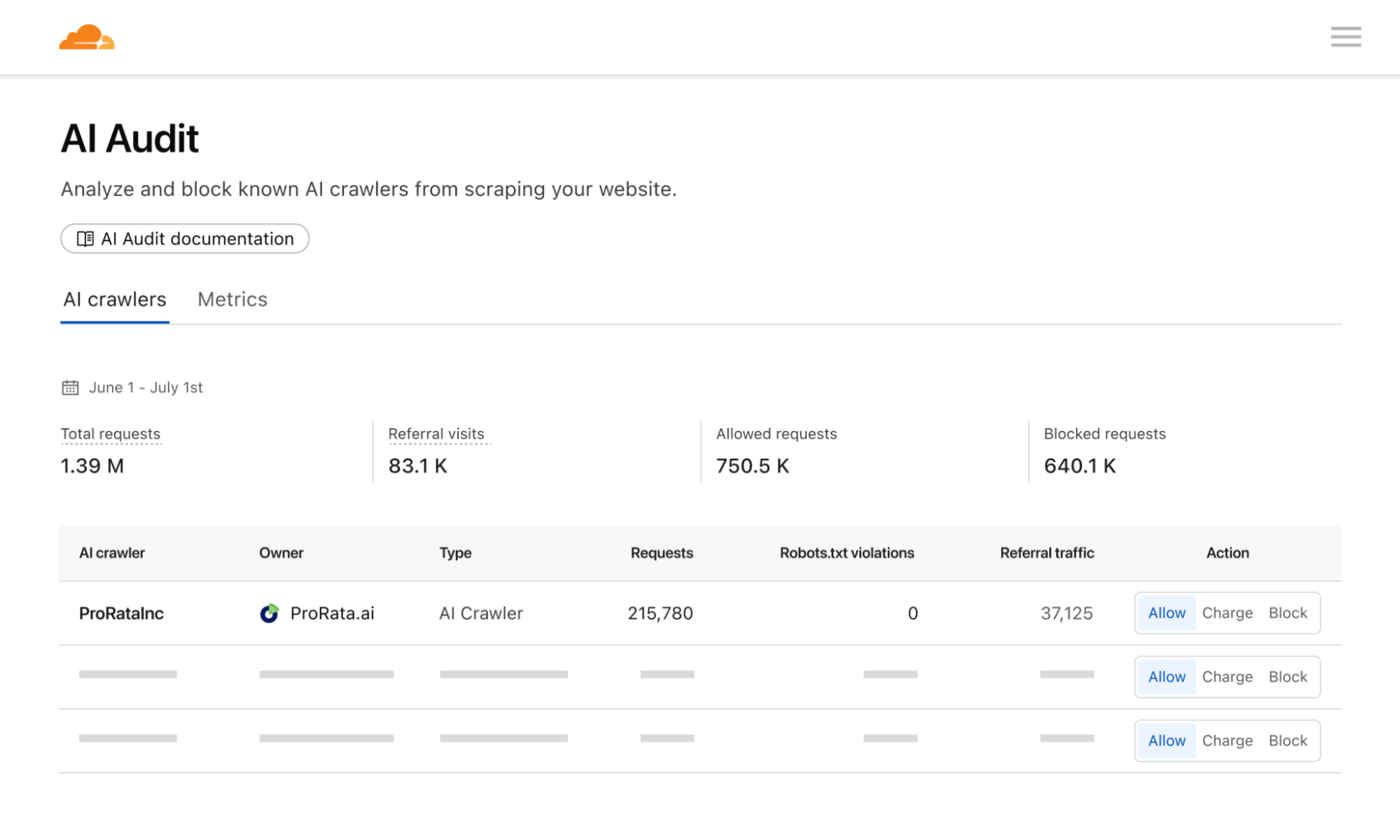

In a groundbreaking move, Cloudflare announced Pay Per Crawl, a new business model in closed beta that lets website owners charge AI crawlers for every request to access their content. Has the mechanism finally arrived that will let creators earn from their work in a world where many searches now start with an AI answer?

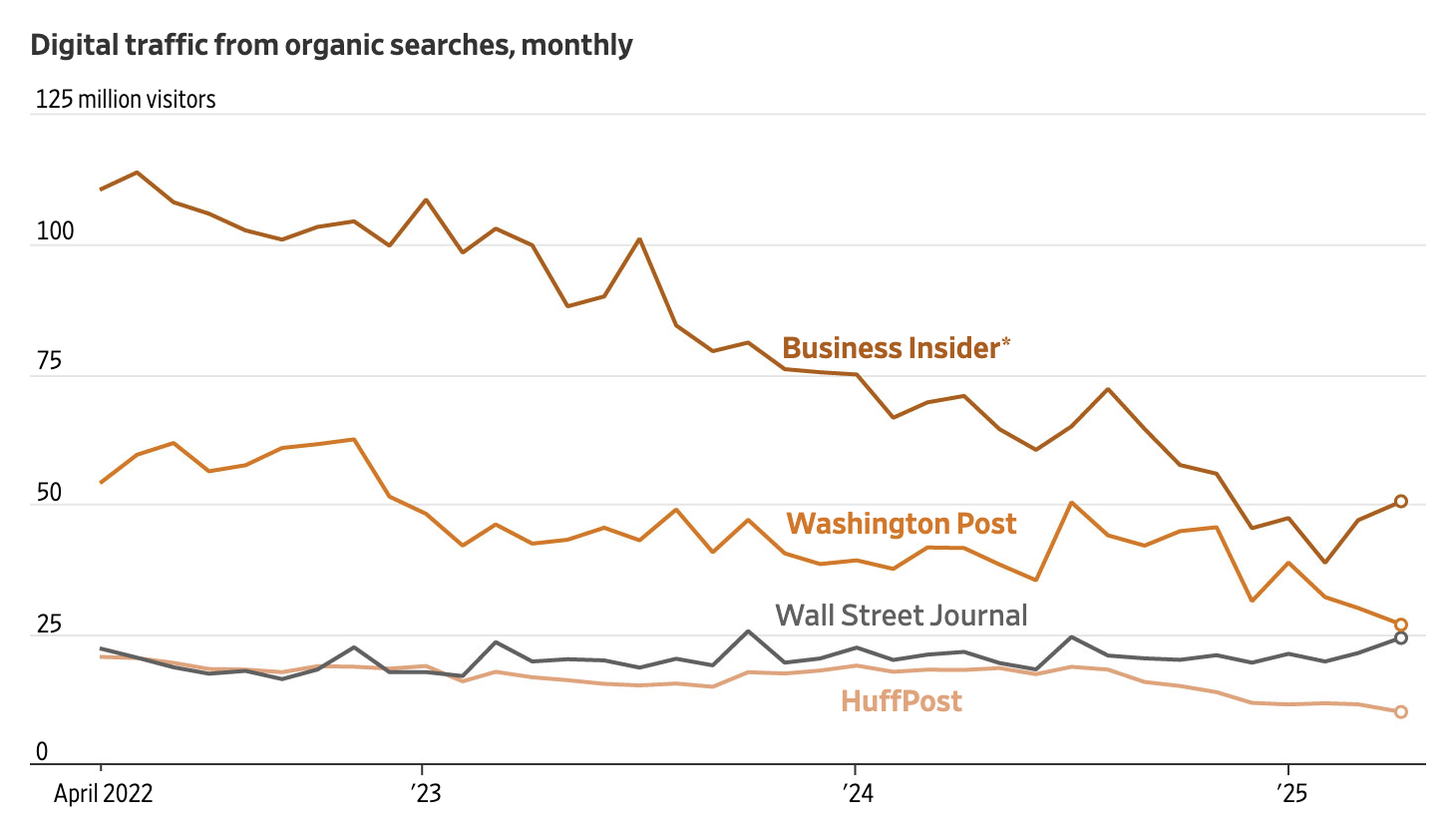

The content world is under siege. AI crawlers read sites and summarize the information, but few users click through to the original links. The result: a sharp drop in traffic to the sites, damage to digital advertising, and a real threat to the economic foundation of content creators. Today, the first technological answer has arrived.

Cloudflare CEO Matthew Prince told Axios [2]:

“Artificial intelligence is killing the business model of the internet. People trust AI more than ever, so they don’t bother to read the original content.”

On July 1, 2025, Cloudflare unveiled a revolutionary fix with the launch of Pay Per Crawl. For the first time, website owners can charge AI crawlers (from OpenAI, Anthropic, and others) for access to their content. The mechanism relies on the little-used HTTP 402 Payment Required status code, an architectural add-on to the existing web.

The Unwritten Pact With Creators Is Cracking

Nearly 30 years ago, two Stanford students created Backrub. It became Google and laid the foundations of the internet’s business model.

The informal deal between Google and site owners was simple:

Let us copy your content for search results; we’ll send you traffic so you can monetize through ads, subscriptions, or simply the joy of being read.

Google built the infrastructure: Search generated traffic, DoubleClick and AdSense generated revenue, and Urchin, a web analytics company Google acquired in 2005, became Google Analytics so everything could be measured.

For almost three decades, that bargain kept the web alive.

But the deal is eroding. Google queries are declining for the first time ever. What is replacing them? AI.

Matthew Prince’s numbers [2]:

- 10 years ago: Google crawled 2 pages for every visitor it sent.

- 6 months ago: Google 6:1, OpenAI 250:1, Anthropic 6,000:1 (pages crawled per visitor sent).

- Today: Google 18:1, OpenAI 1,500:1, Anthropic 60,000:1.
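To put those ratios in perspective, the short Python sketch below works out how fast each ratio grew over six months and roughly how many visitors a site gets back per million pages crawled. It uses only the figures quoted above; the function and variable names are illustrative.

```python
# Crawl-to-referral ratios quoted by Matthew Prince:
# pages crawled for every one visitor sent back to the site.
RATIOS = {
    "Google": {"six_months_ago": 6, "today": 18},
    "OpenAI": {"six_months_ago": 250, "today": 1_500},
    "Anthropic": {"six_months_ago": 6_000, "today": 60_000},
}


def growth(before: int, after: int) -> float:
    """How many times larger the crawl-to-visitor ratio has become."""
    return after / before


def visitors_per_million_crawls(ratio: int) -> float:
    """Visitors a site receives for every million pages crawled."""
    return 1_000_000 / ratio


for name, r in RATIOS.items():
    factor = growth(r["six_months_ago"], r["today"])
    visitors = visitors_per_million_crawls(r["today"])
    print(f"{name}: ratio grew {factor:.0f}x, "
          f"about {visitors:,.0f} visitors per million pages crawled")
```

In six months Google's ratio tripled, OpenAI's grew sixfold, and Anthropic's grew tenfold. At 60,000:1, a million crawled pages sends back about 17 visitors.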

The trend is clear: the mutual deal Google established is crumbling, and creators are being left out.

How Pay Per Crawl Works

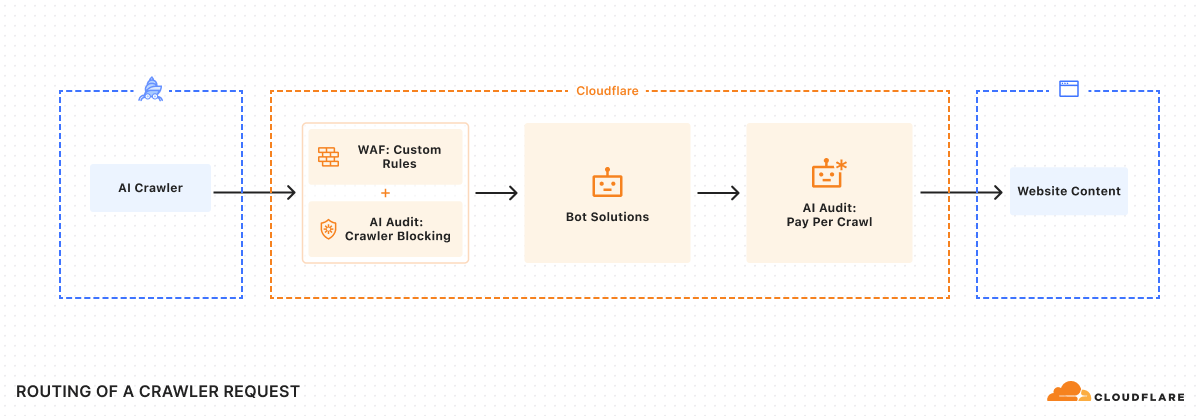

Instead of blocking all crawlers or giving them free access, site owners now have a third option: charge.

For each AI-crawler request, the server can return HTTP 402 with a price. If the crawler agrees, it resends the request with payment intent and receives the content (HTTP 200). If not, access is denied.
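The exchange described above can be sketched from the crawler's side. This is a minimal simulation, not Cloudflare's implementation: the origin is a local function rather than a real server, the price is made up, and the header names (`crawler-price`, `crawler-exact-price`, `crawler-charged`) are assumptions this article does not confirm.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Response:
    status: int
    headers: dict[str, str] = field(default_factory=dict)
    body: str = ""


PRICE = Decimal("0.01")  # hypothetical per-request price in USD


def origin(headers: dict[str, str]) -> Response:
    """Simulated paywalled origin (stands in for a real HTTP server).

    Returns 402 with a price unless the request carries a payment
    intent that matches the price exactly.
    """
    if headers.get("crawler-exact-price") == f"USD {PRICE}":
        return Response(200, {"crawler-charged": f"USD {PRICE}"},
                        "<html>article</html>")
    return Response(402, {"crawler-price": f"USD {PRICE}"})


def fetch(budget: Decimal) -> Response:
    """Crawler side: request, read the price, pay only if within budget."""
    first = origin({})
    if first.status != 402:
        return first  # content was free or blocked outright
    currency, amount = first.headers["crawler-price"].split()
    if currency != "USD" or Decimal(amount) > budget:
        return first  # too expensive: leave without the content
    # Resend the same request, now declaring the intent to pay.
    return origin({"crawler-exact-price": f"{currency} {amount}"})


print(fetch(Decimal("0.05")).status)   # crawler can afford the price
print(fetch(Decimal("0.001")).status)  # crawler's budget is too low
```

With a budget above the price, the second request succeeds with HTTP 200; with a lower budget, the crawler keeps the 402 and never receives the content.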

In Cloudflare’s dashboard, publishers can set for each crawler:

- Allow free access (200)

- Charge per request (402 + price)

- Block but signal payment is possible (403)
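On the publisher's side, the three options amount to a per-crawler lookup table. The sketch below assumes a hypothetical policy, price, and header names, and matches crawlers by User-Agent substring only to keep the example short.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    CHARGE = "charge"
    BLOCK = "block"


# Hypothetical per-crawler policy a publisher might configure.
POLICY = {
    "Googlebot": Action.ALLOW,
    "GPTBot": Action.CHARGE,
    "Bytespider": Action.BLOCK,
}
PRICE_USD = "0.01"  # hypothetical flat price per request


def decide(user_agent: str,
           has_payment_intent: bool = False) -> tuple[int, dict[str, str]]:
    """Return the (status code, response headers) for one request.

    User-Agent matching is a simplification: the string is trivially
    spoofed, so a real deployment needs stronger crawler verification.
    """
    ua = user_agent.lower()
    # Visitors not named in the policy (e.g. browsers) get free access.
    action = next(
        (a for name, a in POLICY.items() if name.lower() in ua),
        Action.ALLOW,
    )
    if action is Action.ALLOW:
        return 200, {}
    if action is Action.BLOCK:
        return 403, {}
    if has_payment_intent:
        return 200, {"crawler-charged": f"USD {PRICE_USD}"}
    return 402, {"crawler-price": f"USD {PRICE_USD}"}


for agent in ("Googlebot/2.1", "GPTBot/1.1", "Bytespider"):
    print(agent, decide(agent))
```

Keeping the policy as data rather than branching logic means a publisher can change a crawler's treatment without touching the request handler.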

In plain terms, content becomes both knowledge and a paid asset.

Note that Cloudflare is no garage startup. It provides the CDN for 20% of the web, from Reddit and Medium to Shopify, Udemy, and The Guardian. If Cloudflare wishes, it can shut AI crawlers out at massive scale.

It also sees exactly who visits those sites.

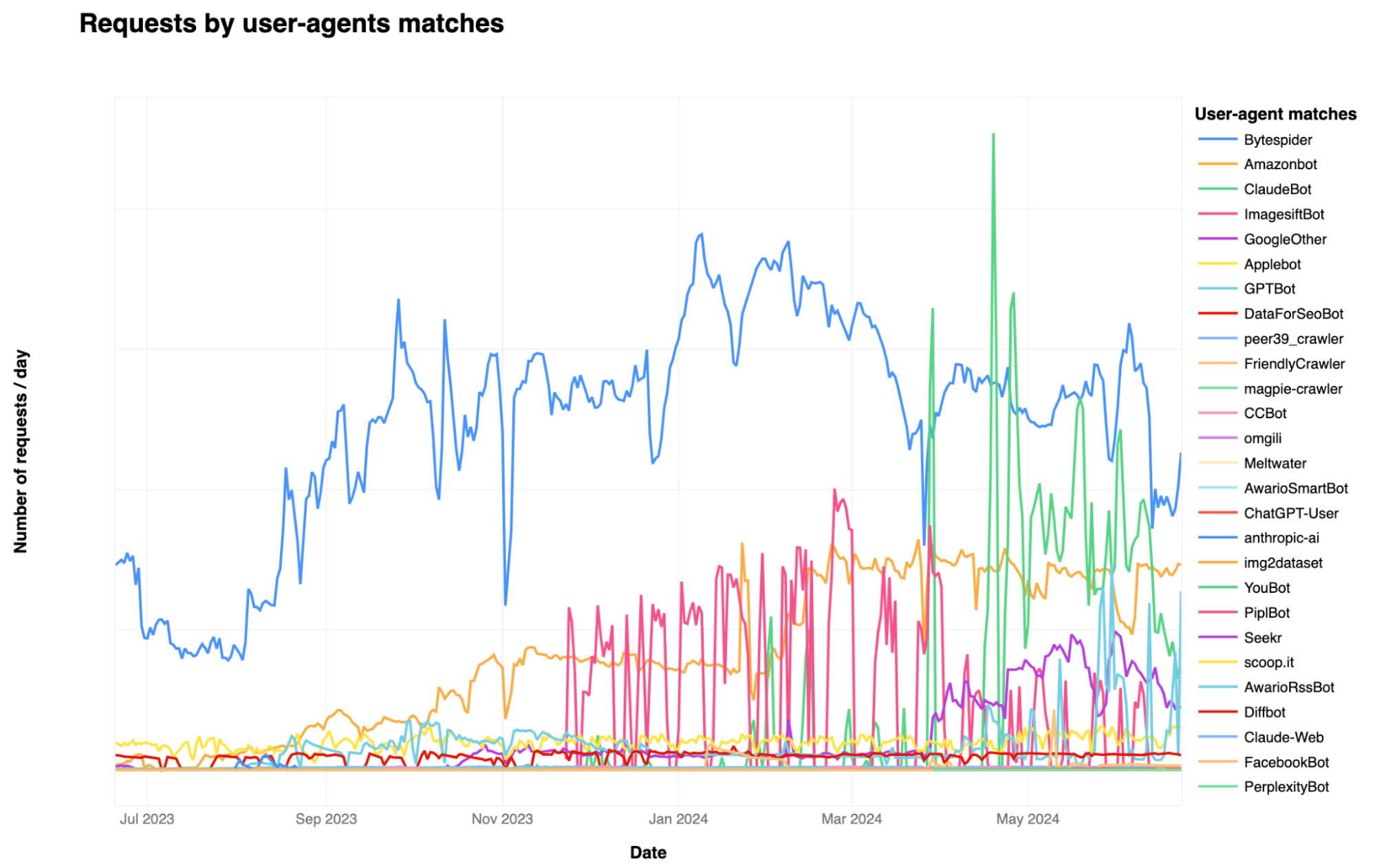

According to Cloudflare, Bytespider (ByteDance) is the most active AI crawler, hitting 40.4% of protected sites, followed by GPTBot (OpenAI) at 35.5% and ClaudeBot (Anthropic) at 11.2%.

Interestingly, GPTBot by OpenAI, though mainstream, is also one of the most blocked.

Cloudflare adds that many publishers don’t even realize how often AI crawlers visit them, and that the phenomenon is broader than assumed.

Impact on Classic SEO

Cloudflare does not automatically block BingBot, Googlebot, or other traditional search crawlers. The update targets generative-AI crawlers (OpenAI, Anthropic, etc.).

Yet every site owner could now block or charge classical search engines too, and the option to charge never existed before.

Will they? Probably not soon: organic Google traffic is still a lifeline, even as The Wall Street Journal recently reported traffic drops due to AI-generated answers.

Blocking Google today would jeopardize crucial traffic. Google thus enjoys a quiet “special status.” If we could rewind SEO history, perhaps free indexing would not have been granted, but that’s hindsight.

Impact on GEO (Generative-Engine Optimization)

Does Pay Per Crawl affect GEO as well? Yes, and more deeply than it seems.

Take ChatGPT, which searches via Bing. Two distinct back-end stages matter:

- Model training: LLMs learn from a vast corpus gathered by dedicated crawlers (GPTBot, ClaudeBot, etc.).

- Real-time retrieval: After training, the model fetches fresh information through an external search engine (for paid ChatGPT, that is Bing).

One might say, “If Bing isn’t blocked, ChatGPT can still see my site.” But training builds the model’s general knowledge; real-time search is just a patch.

If more sites block AI crawlers, training data shrinks, leading to:

- Lower answer quality—models lack deep domain familiarity even with real-time search.

- Source bias—models “trust” sites seen during training, so unseen sites may be down-weighted.

- Long-term GEO effects—a narrower, skewed knowledge base. Sites excluded at training may never enter the model’s awareness.

Bottom Line

- As AI use grows, users visit websites far less frequently, leading to significant drops in advertising revenue.

- Visibility in AI answers starts not at search time but at training: does the model even know you? More advanced pricing schemes could charge crawlers differently for training and for search.

- Cloudflare's Pay Per Crawl is one attempt to tackle the challenge of paying for content.

More Information

[1] Pavel Israelsky is a GEO expert with experience in the search industry since 2007. Founder & CEO of Angora Media, a leading digital marketing agency in Israel, and Co-Founder of Chatoptic, LLM visibility software helping brands get discovered in AI-generated answers. https://www.linkedin.com/in/israelsky/

[2] Cloudflare CEO Matthew Prince, 17-minute video for Axios. https://www.youtube.com/watch?v=H5C9EL3C82Y&t=168s